Have you ever waited for a price cut before buying something you like? You either get tired of checking the price every day or miss the discount. Many people have had this experience.

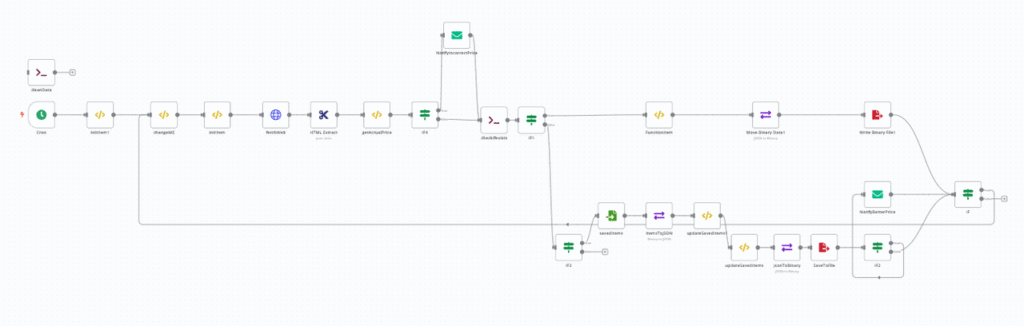

Actually, it doesn’t have to be that complicated! We can use n8n to implement a “product price monitoring workflow” that monitors price changes on multiple product web pages at regular intervals and sends notifications when prices drop or price extraction fails.

1. Core Purpose

The workflow automatically tracks product prices on specified e-commerce pages, checking them every 15 minutes. When a price drops, it sends an email notification. If price extraction fails (e.g., because the selector is wrong or the page structure has changed), it sends an error alert instead. It suits scenarios such as comparing prices before a personal purchase or monitoring competitors’ prices.

2. Key Nodes and Process Analysis

The workflow follows this logic: timed trigger → loop over products → scrape the price → compare with the historical price → send notifications. The core nodes work as follows:

1. Timed trigger (Cron node)

- Type: `cron`

- Logic: Trigger the workflow every 15 minutes.

- Purpose: Control the monitoring frequency so that prices are checked regularly.
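To make “every 15 minutes” concrete, the Python sketch below computes the next few trigger times. It assumes the node behaves like the standard cron expression `*/15 * * * *` (firing at minutes 0, 15, 30 and 45 of every hour); in n8n the schedule is set in the node’s UI, and `next_runs` is a hypothetical helper for illustration only.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def next_runs(start: datetime, count: int, interval_minutes: int = 15) -> list[datetime]:
    """Return the next `count` clock-aligned trigger times strictly after `start`,
    mimicking `*/15 * * * *` (hypothetical helper, not an n8n API)."""
    if 60 % interval_minutes:
        raise ValueError("interval must divide 60 to stay aligned like cron's */N")
    # Drop seconds and round down to the current interval boundary.
    base = start.replace(second=0, microsecond=0)
    base -= timedelta(minutes=base.minute % interval_minutes)
    runs = []
    candidate = base
    while len(runs) < count:
        candidate += timedelta(minutes=interval_minutes)
        runs.append(candidate)
    return runs


if __name__ == "__main__":
    for t in next_runs(datetime(2024, 1, 1, 10, 7), 3):
        print(t.strftime("%H:%M"))  # prints 10:15, 10:30, 10:45 on separate lines
```

A shorter interval catches drops sooner but multiplies requests to the shop; 15 minutes is the trade-off this workflow chose.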

2. Define the list of monitored products (changeME node)

- Type: `functionItem` (custom function)

- Logic: Define the list of products to be monitored (the `myWatchers` array). Each product contains:
  - `slug`: unique identifier of the product (e.g. `kopacky`, `mobilcek`);
  - `link`: product page URL (such as an Adidas shoes page);
  - `selector`: CSS selector used to extract the price (e.g. `.prices > strong:nth-child(1) > span:nth-child(1)`);
  - `currency`: currency unit (e.g. `EUR`).

- Purpose: Configure the monitoring targets. Users add or modify the products to be tracked here.
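The `myWatchers` array is the part most users edit, so a typo there is the most likely failure. The sketch below models the array as a Python list (the n8n node itself holds it as a JavaScript array) and adds a hypothetical `validate_watchers` check that flags missing fields and duplicate slugs. The `example.com` links and the second product’s selector are placeholders, not values from the original workflow.

```python
from __future__ import annotations

REQUIRED_FIELDS = ("slug", "link", "selector", "currency")

# Hypothetical placeholder links; point these at the real product pages.
my_watchers = [
    {
        "slug": "kopacky",
        "link": "https://example.com/adidas-shoes",
        "selector": ".prices > strong:nth-child(1) > span:nth-child(1)",
        "currency": "EUR",
    },
    {
        "slug": "mobilcek",
        "link": "https://example.com/phone",
        "selector": ".prices > strong:nth-child(1) > span:nth-child(1)",  # placeholder
        "currency": "EUR",
    },
]


def validate_watchers(watchers: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the config looks usable."""
    problems = []
    seen_slugs = set()
    for index, watcher in enumerate(watchers):
        for field in REQUIRED_FIELDS:
            if not str(watcher.get(field, "")).strip():
                problems.append(f"watcher {index}: missing '{field}'")
        slug = watcher.get("slug")
        # The slug is the product's unique identifier, so repeats are an error.
        if slug in seen_slugs:
            problems.append(f"watcher {index}: duplicate slug '{slug}'")
        seen_slugs.add(slug)
    return problems
```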

3. Loop through the products (initItem node)

- Type: `functionItem`

- Logic: Track the index of the product currently being processed in the global variable `globalData.iteration`, take product information from the `myWatchers` array one item at a time, and determine whether another product remains to be processed (the `canContinue` field).

- Purpose: Implement multi-product monitoring so that each product is processed in turn.
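The iteration bookkeeping can be sketched in Python as follows. In n8n, state that must survive between node executions lives in workflow static data (what the article calls `globalData`); here a plain dict stands in for it. The function name `init_item` and the reset-to-zero after the last product are assumptions of this sketch, not details confirmed by the original workflow.

```python
from __future__ import annotations

from typing import Any


def init_item(watchers: list[dict], global_data: dict[str, Any]) -> dict[str, Any]:
    """Return the product at global_data['iteration'] plus a canContinue flag,
    then advance the index (hypothetical re-implementation of initItem)."""
    index = global_data.get("iteration", 0)
    item = dict(watchers[index])
    index += 1
    # canContinue is True while at least one more product remains after this one.
    item["canContinue"] = index < len(watchers)
    # Assumption: reset after the last product so the next scheduled run starts over.
    global_data["iteration"] = index if item["canContinue"] else 0
    return item
```

Without the reset, the index would point past the end of the list on the next 15-minute run.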

4. Fetch Web Page and Extract Price (fetchWeb + HTML Extract Nodes)

- fetchWeb (`httpRequest`): Fetch the HTML content of the page from the current product’s `link`.

- HTML Extract: Use the product’s `selector` (CSS selector) to extract the price text (e.g. “99,99”) from the HTML.

- Purpose: Obtain the raw price data from the target page.
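As a rough stand-alone equivalent of these two nodes, the Python sketch below downloads a page with `urllib` and extracts the price text. To stay within the standard library it hard-codes the example selector `.prices > strong:nth-child(1) > span:nth-child(1)` rather than parsing arbitrary CSS; `fetch_html`, `extract_price_text` and the User-Agent string are hypothetical, and a real CSS-selector library would replace the hand-written matcher in practice.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Elements that never have a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def fetch_html(url: str, timeout: float = 30.0) -> str:
    """Download a page like the fetchWeb node would (assumes UTF-8 content)."""
    request = Request(url, headers={"User-Agent": "price-watcher-sketch/0.1"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


class PriceTextParser(HTMLParser):
    """Hard-coded matcher for `.prices > strong:nth-child(1) > span:nth-child(1)`.
    Keeps the text of the first matching span in `self.result`."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[dict] = []  # open elements with match level and child count
        self.capture_depth: int | None = None  # stack depth of the span being read
        self.buffer: list[str] = []
        self.result: str | None = None

    def handle_starttag(self, tag, attrs):
        parent = self.stack[-1] if self.stack else None
        position = 0
        if parent is not None:
            parent["children"] += 1
            position = parent["children"]  # 1-based; text nodes are not counted
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        level = 1 if "prices" in classes else 0  # matches `.prices`
        if parent is not None and position == 1:
            if parent["level"] == 1 and tag == "strong":
                level = 2  # `.prices > strong:nth-child(1)`
            elif parent["level"] == 2 and tag == "span":
                level = 3  # `... > span:nth-child(1)`
        self.stack.append({"tag": tag, "level": level, "children": 0})
        if level == 3 and self.result is None and self.capture_depth is None:
            self.capture_depth = len(self.stack)

    def handle_endtag(self, tag):
        if all(entry["tag"] != tag for entry in self.stack):
            return  # stray closing tag
        while self.stack.pop()["tag"] != tag:
            pass  # also closes children the page left unclosed
        if self.capture_depth is not None and len(self.stack) < self.capture_depth:
            self.result = "".join(self.buffer).strip()
            self.capture_depth = None

    def handle_data(self, data):
        if self.capture_depth is not None:
            self.buffer.append(data)


def extract_price_text(html: str) -> str | None:
    """Return the price text, or None when the selector matches nothing."""
    parser = PriceTextParser()
    parser.feed(html)
    parser.close()
    return parser.result
```

Typical use would be `extract_price_text(fetch_html(watcher["link"]))`. An HTTP request only returns the HTML the server sends; if a shop inserts prices with JavaScript after the page loads, neither this sketch nor a plain HTTP request will see them, and extraction fails.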

5. Price cleaning and verification (getActualPrice node)

- Type: `functionItem`

- Logic:
  - Clean the extracted price text (e.g. “99,99”) into a number: replace the comma with a dot and convert it to a float (`99.99`);
  - Verify that the price is valid (`priceExists = price > 0`);
  - Save the current price to the global variable `globalData.actualPrice`.

- Purpose: Ensure that the price is correctly formatted and valid, ready for the comparison that follows.
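The cleaning rule translates almost line for line into Python. In this sketch, `get_actual_price` is a hypothetical name and a dict stands in for `globalData`; the regular expression picks the first number in the text, so a prefix such as “EUR ” does not break parsing.

```python
from __future__ import annotations

import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def get_actual_price(price_text: str, global_data: dict) -> dict:
    """Turn text such as '99,99' into a float and flag whether it is usable."""
    normalized = price_text.replace(",", ".")  # decimal comma -> decimal point
    match = NUMBER.search(normalized)
    # Unparseable text becomes 0.0, so priceExists below is False.
    price = float(match.group()) if match else 0.0
    global_data["actualPrice"] = price
    return {"price": price, "priceExists": price > 0}
```

Like the replace-the-comma rule it mirrors, this misreads prices with thousands separators: `1.299,00` becomes `1.299.00` and parses as `1.299`. If a shop formats prices that way, remove the dots before replacing the comma.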

6. Price validity judgment (IF4 node)

- Type: `if` (conditional)

- Condition: Check whether `priceExists` is `true` (i.e., the price is valid).

- Branches:
  - Invalid (`priceExists = false`): Trigger the `NotifyIncorrectPrice` node, which sends an email alert: “Price extraction failed, please check the link or selector”.
  - Valid (`priceExists = true`): Continue with the price comparison.
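The branch itself is a single boolean test. The Python sketch below mirrors it and shows what a useful alert could contain: including the link and selector saves a lookup when a page layout changes. The branch names, `incorrect_price_email` and the subject layout are hypothetical; only the first sentence of the message comes from the workflow.

```python
from __future__ import annotations


def route_on_price(item: dict) -> str:
    """Mimic the IF4 node: choose the branch for a cleaned price item."""
    return "compare" if item.get("priceExists") is True else "NotifyIncorrectPrice"


def incorrect_price_email(watcher: dict) -> dict:
    """Hypothetical content for the NotifyIncorrectPrice alert."""
    return {
        "subject": f"{watcher['slug']}: price extraction failed",
        "text": (
            "Price extraction failed, please check the link or selector.\n"
            f"Link: {watcher['link']}\nSelector: {watcher['selector']}"
        ),
    }
```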

7. Historical price storage and comparison (file operation + updateSavedItems node)

The workflow stores historical prices in the local file `/data/kopacky.json`. There are two cases:

(1) First run (file does not exist)

- `checkifexists` node (`executeCommand`): Runs a command that checks whether `kopacky.json` exists; if it does not, `stdout` is empty.

- `IF1` node: Detects that the file does not exist and triggers the `FunctionItem` node, which generates the initial product list as JSON. `Move Binary Data1` converts it to binary, and `Write Binary File1` writes it to `kopacky.json`. The initial file contains the basic information of all monitored products, with empty prices.
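Outside n8n, the first-run check needs no shell command. The Python sketch below tests for the file directly and writes the initial list with empty prices (`None`, serialized as JSON `null`). The helper name `ensure_price_file` and the record fields are assumptions; the original workflow builds this JSON in its `FunctionItem` node.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path


def ensure_price_file(path: Path, watchers: list[dict]) -> bool:
    """Create the history file with empty prices if it is missing.
    Returns True when the file was created (the 'first run' branch)."""
    if path.exists():  # replaces the checkifexists command and the IF1 test
        return False
    initial = [
        {"slug": w["slug"], "link": w["link"], "currency": w["currency"], "price": None}
        for w in watchers
    ]
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(initial, indent=2), encoding="utf-8")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "kopacky.json"  # stands in for /data/kopacky.json
        watchers = [{"slug": "kopacky", "link": "https://example.com/p", "currency": "EUR"}]
        print(ensure_price_file(target, watchers))  # True: first run
        print(ensure_price_file(target, watchers))  # False: file already exists
```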

(2) Non-first run (file exists)

- `savedItems` node (`readBinaryFile`): Reads the historical price data from `kopacky.json`.

- `itemsToJSON` node: Converts the binary file content to JSON.

- `updateSavedItems1` node (`functionItem`): Compares the current price with the historical price:
  - If the current price is lower than the historical price, it updates the historical price and records the previous value as `oldPrice`;
  - If the historical price is empty (the product is being captured for the first time), it saves the current price directly.

- `SaveToFile` node: Writes the updated price data back to `kopacky.json`.
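The comparison rules can be sketched in Python as below. `update_saved_items` and `save_items` are hypothetical names, and two behaviours are assumptions not stated in the article: a product missing from the file is appended, and `oldPrice` is cleared on every run so that an unchanged price does not re-trigger the drop alert every 15 minutes.

```python
from __future__ import annotations

import json
from pathlib import Path


def update_saved_items(saved: list[dict], slug: str, current_price: float) -> dict:
    """Apply the comparison rules to the stored record for `slug`.
    Sets record['oldPrice'] only when this run found a lower price."""
    record = next((r for r in saved if r["slug"] == slug), None)
    if record is None:  # assumption: a product added after the file was created
        record = {"slug": slug, "price": None}
        saved.append(record)
    record["oldPrice"] = None  # assumption: cleared each run so an alert fires once
    if record.get("price") is None:
        record["price"] = current_price  # first capture: store the price directly
    elif current_price < record["price"]:
        record["oldPrice"] = record["price"]  # keep the previous price for the email
        record["price"] = current_price
    return record


def save_items(path: Path, saved: list[dict]) -> None:
    """Write the history back to disk, like the SaveToFile node."""
    path.write_text(json.dumps(saved, indent=2), encoding="utf-8")
```

Under these rules the stored price never goes up, so it is effectively the lowest price seen so far; a later price increase is not written back.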

8. Better Price Notification (IF2 + NotifyBetterPrice Node)

- `IF2` node (`if`): Determines whether the current price is lower than the historical price (`getActualPrice.json.price < updateSavedItems1.json.oldPrice`).

- `NotifyBetterPrice` node (`emailSend`): If the price has dropped, sends an email notification:
  - Content: the new price, the old price, and the product URL;
  - Recipient: the address configured in the node;
  - Subject: `[Product slug] – [New price] [Currency]`.
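The IF2 condition and the email it guards can be sketched together in Python. `better_price_email` and the body layout are hypothetical; the subject follows the `[Product slug] – [New price] [Currency]` pattern above.

```python
from __future__ import annotations


def better_price_email(slug: str, link: str, currency: str,
                       new_price: float, old_price: float | None) -> dict | None:
    """Return the NotifyBetterPrice email when IF2's condition holds, else None."""
    # IF2: new price < old price; old_price is None when nothing dropped.
    if old_price is None or not new_price < old_price:
        return None
    return {
        "subject": f"{slug} – {new_price:.2f} {currency}",
        "text": (
            f"New price: {new_price:.2f} {currency}\n"
            f"Old price: {old_price:.2f} {currency}\n"
            f"URL: {link}"
        ),
    }
```

The explicit `None` check matters in Python, where comparing a float with `None` raises `TypeError` instead of simply evaluating to false.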

9. Loop control (IF node)

- Type: `if`

- Condition: Check whether `initItem.json.canContinue` is `true` (i.e., whether unprocessed products remain).

- Purpose: If there is a next product, return to the `changeME` node and continue the loop; otherwise, the workflow ends.
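The workflow builds its loop by routing the IF node’s output back to `changeME`; in ordinary code the same control flow is a plain `while` loop. The Python sketch below shows one scheduled run, with a hypothetical `process` callback standing in for the fetch, extract, compare and notify steps; `run_once` is likewise an illustrative name.

```python
from __future__ import annotations

from typing import Callable


def run_once(watchers: list[dict], process: Callable[[dict], None]) -> int:
    """One scheduled run: handle products until canContinue is False.
    Returns how many products were processed."""
    if not watchers:
        return 0
    global_data = {"iteration": 0}  # stands in for globalData
    handled = 0
    while True:
        index = global_data["iteration"]
        item = dict(watchers[index])
        item["canContinue"] = index + 1 < len(watchers)  # what initItem sets
        global_data["iteration"] = index + 1
        process(item)  # fetch, extract, compare, notify
        handled += 1
        if not item["canContinue"]:  # the final IF node ends the loop
            return handled
```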

Summary

This workflow automates the whole process for multiple products: scheduled monitoring → automatic scraping → comparison with historical prices → alerts for extraction failures or price drops. Users only need to configure each product’s URL and price selector in the `changeME` node to receive price-drop alerts within about 15 minutes of a change, without checking the pages manually.

Applicable Scenarios

- Individual consumers track price drops of their favorite products (e.g. sneakers, electronics);

- Merchants monitor the prices of competing products and adjust their pricing strategies;

- Beginners in web scraping can study the logic of web page data extraction and local storage.